SEPTEMBER 3, 2025

Scalable realtime token prices with Birdeye WebSockets and Supabase Realtime

Edgar Pavlovsky

People care about price the most when it changes the fastest. Realtime price delivery is table stakes for a trading product, but doing it well at startup speed is non-trivial. Our approach with Scout is simple, robust, and scales cleanly with user growth.

Architecture overview

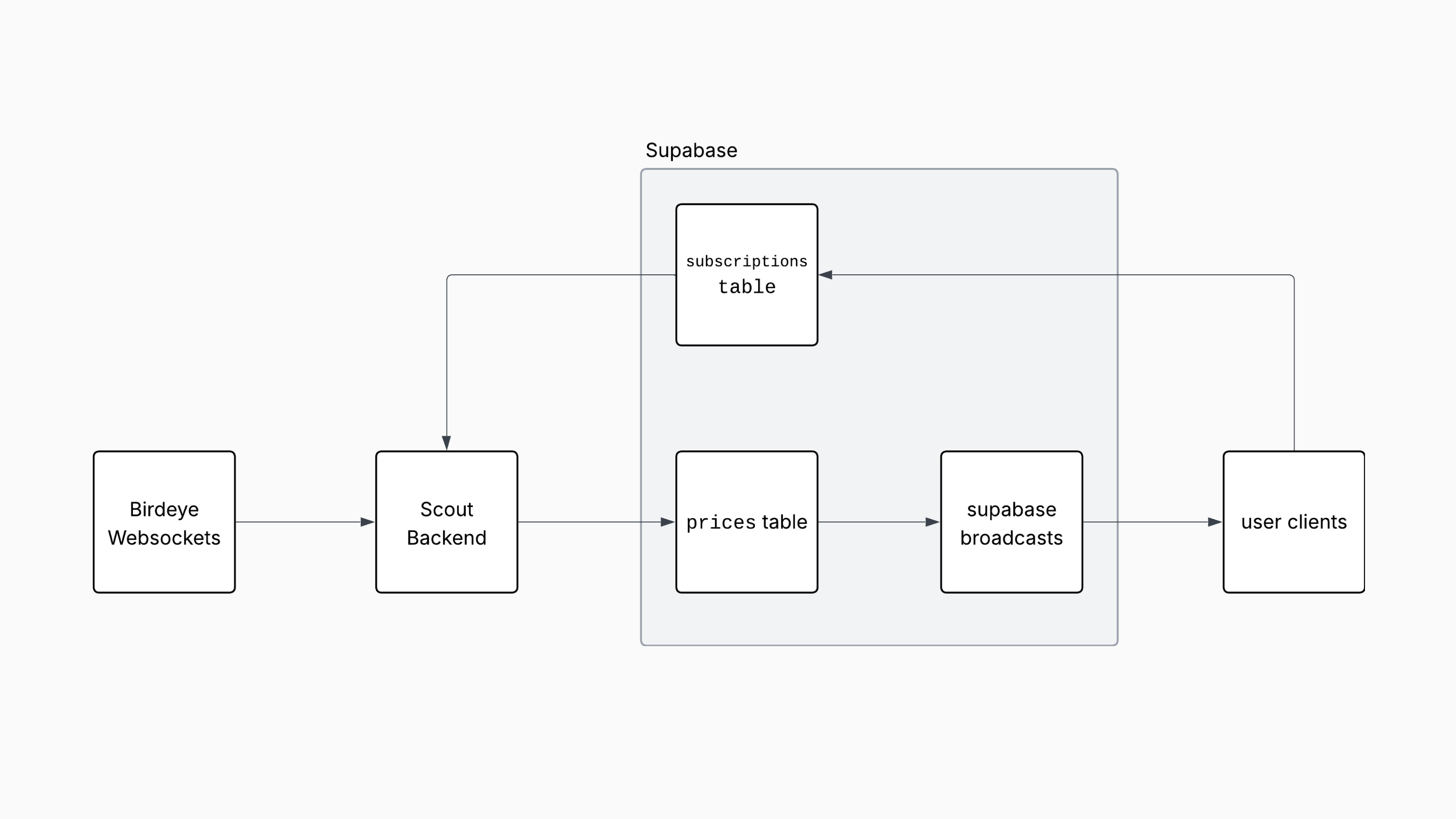

At a high level, our pipeline looks like this:

- Birdeye WebSockets stream live ticks and token events.

- Subscription Service multiplexes client interest into a minimal set of upstream subscriptions, deduplicates by token/timeframe, and writes normalized updates to Supabase (Postgres).

- Supabase Realtime broadcasts row-level changes to all connected clients instantly.

One guiding principle shapes the system: the price service only writes to Supabase. Delivery to clients is handled by Supabase Realtime. This clean separation keeps our code simple and makes behavior easy to reason about.

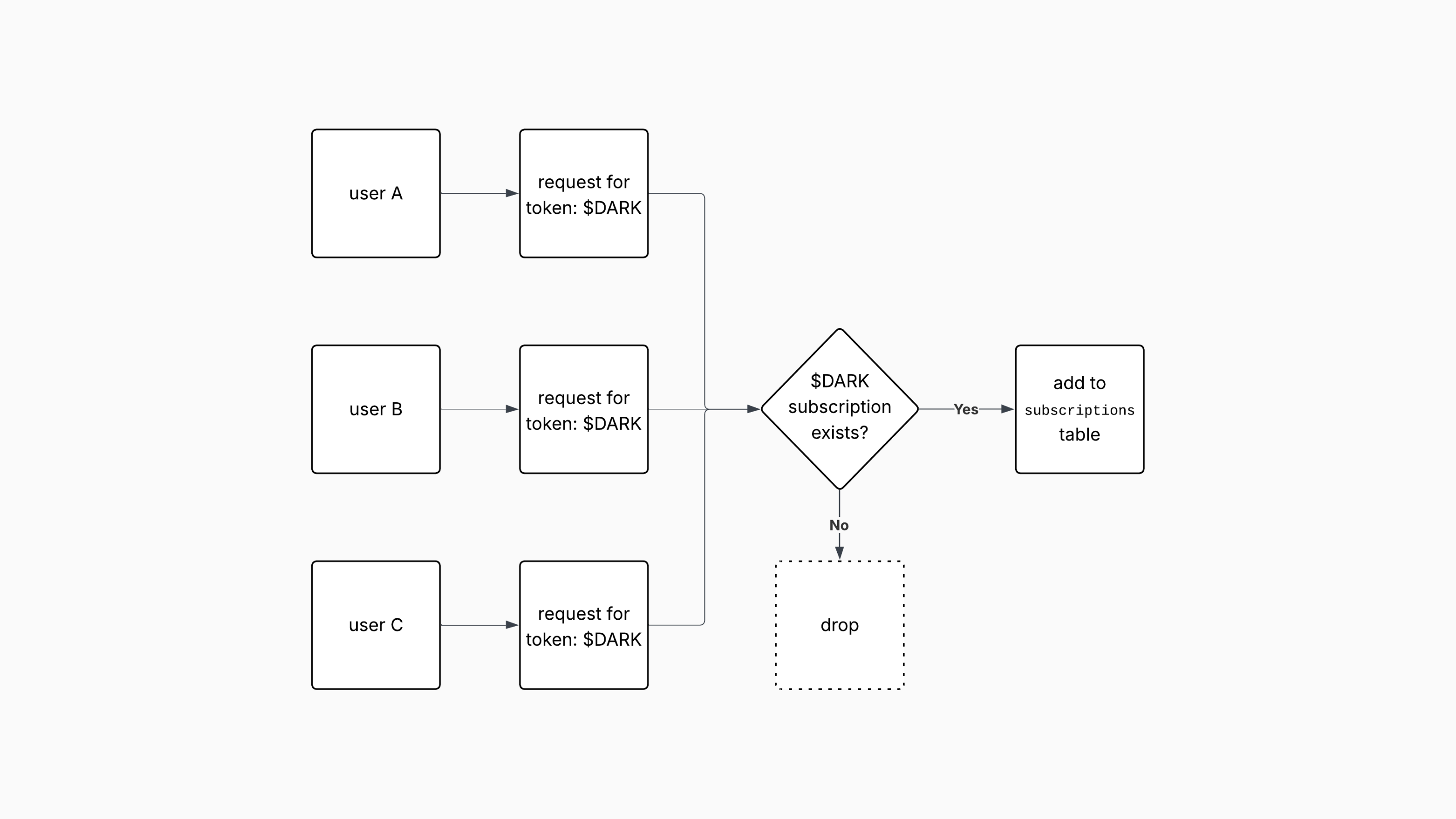

Why this scales with users

The naive implementation opens one upstream WebSocket per client per token, so upstream connections multiply with every new user. We do the opposite. Clients express interest to our service; we coalesce all requests and maintain a small pool of upstream connections, each bundling multiple tokens. Birdeye's WebSocket supports up to 100 token subscriptions per connection, so whether one user or a thousand users are watching, upstream load depends only on how many distinct tokens are being watched.

- Multiplexed upstream: up to 100 tokens per Birdeye WebSocket connection, dramatically reducing connection overhead.

- Fan-out at the database edge: a single write becomes many client messages via Supabase Realtime.

- Backpressure safety: if a client disconnects, nothing thrashes upstream; Supabase Realtime simply stops delivering broadcasts to that client.

Upstream cost scales with tokens tracked, not with users online. That is the core win. It also means we can keep the service lean: less connection churn, fewer reconnection storms, and simpler horizontal scaling.
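The coalescing idea can be sketched in a few lines of TypeScript. This is a minimal illustration, not Scout's subscription service: `SubscriptionRegistry` and `planConnections` are hypothetical names, tokens are placeholder strings, and the only number taken from the text is the 100-tokens-per-connection limit. The registry tracks which clients want which tokens, and the planner packs the distinct tokens into connections of at most 100.

```typescript
// Sketch only: class and function names are hypothetical, and Birdeye's
// actual subscribe/unsubscribe messages are not modeled.
const MAX_TOKENS_PER_CONNECTION = 100; // per-connection limit cited above

type ClientId = string;
type TokenAddress = string;

class SubscriptionRegistry {
  // token -> clients currently interested in that token
  private interest = new Map<TokenAddress, Set<ClientId>>();

  /** Records interest; returns true only for the first watcher of a token. */
  add(client: ClientId, token: TokenAddress): boolean {
    const existing = this.interest.get(token);
    if (existing) {
      existing.add(client);
      return false; // already subscribed upstream, nothing to do
    }
    this.interest.set(token, new Set([client]));
    return true; // first watcher: an upstream subscription is needed
  }

  /** Drops a client; returns tokens that no client watches anymore. */
  removeClient(client: ClientId): TokenAddress[] {
    const orphaned: TokenAddress[] = [];
    this.interest.forEach((clients, token) => {
      if (clients.delete(client) && clients.size === 0) {
        this.interest.delete(token); // deleting during Map.forEach is safe
        orphaned.push(token);
      }
    });
    return orphaned;
  }

  /** Distinct tokens, independent of how many clients watch each one. */
  tokens(): TokenAddress[] {
    return Array.from(this.interest.keys()).sort();
  }
}

/** Packs distinct tokens into the fewest connections the limit allows. */
function planConnections(
  tokens: TokenAddress[],
  limit: number = MAX_TOKENS_PER_CONNECTION,
): TokenAddress[][] {
  const plan: TokenAddress[][] = [];
  for (let i = 0; i < tokens.length; i += limit) {
    plan.push(tokens.slice(i, i + limit));
  }
  return plan;
}

// 1,000 clients watching the same three tokens still need one upstream connection.
const registry = new SubscriptionRegistry();
for (let c = 0; c < 1000; c++) {
  for (const token of ["tokenA", "tokenB", "tokenC"]) registry.add(`client-${c}`, token);
}
console.log(planConnections(registry.tokens()).length); // 1
```

Two design notes on this sketch. `removeClient` only reports orphaned tokens; the caller can unsubscribe them lazily (for example after a grace period) so a brief client disconnect causes no upstream churn. And the chunking is the simplest possible packing; a production planner would more likely assign new tokens to connections with spare capacity rather than re-chunking, so existing subscriptions never move.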

Data model and writes

Incoming events are normalized into a compact schema and upserted into Postgres. We keep the write path small and predictable: write the latest price, rolling stats, and a heartbeat. Nothing fancy in the hot path. Postgres becomes the single source of truth for price state.
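A compact normalize-and-upsert step might look like the sketch below. It assumes a hypothetical `RawTick` input shape (Birdeye's actual message format is not reproduced) and uses an in-memory `LatestPriceTable` as a stand-in for Postgres. The key idea is latest-wins on `(token, timeframe)`: duplicate or out-of-order events are rejected, which keeps writes idempotent and avoids broadcasting no-op updates. Rolling stats are omitted for brevity.

```typescript
// Sketch only: RawTick is a hypothetical upstream shape, not Birdeye's real
// message format, and LatestPriceTable stands in for a Postgres table.
interface RawTick {
  address: string;
  timeframe: string; // e.g. "1m"
  price: number;
  unixTime: number; // seconds since epoch
}

interface PriceRow {
  token: string;
  timeframe: string;
  price: number;
  observed_at: number; // ms since epoch, from the upstream event
  heartbeat_at: number; // ms since epoch, when our service wrote the row
}

/** Maps a raw tick to the compact row shape; drops obviously bad data. */
function normalize(raw: RawTick, nowMs: number): PriceRow | null {
  if (!Number.isFinite(raw.price) || raw.price <= 0) return null;
  return {
    token: raw.address,
    timeframe: raw.timeframe,
    price: raw.price,
    observed_at: raw.unixTime * 1000,
    heartbeat_at: nowMs,
  };
}

/** Latest-wins store keyed by (token, timeframe), like a unique constraint. */
class LatestPriceTable {
  private rows = new Map<string, PriceRow>();

  /** Returns false for duplicate or out-of-order events, so nothing is written. */
  upsert(row: PriceRow): boolean {
    const key = `${row.token}:${row.timeframe}`;
    const current = this.rows.get(key);
    if (current !== undefined && current.observed_at >= row.observed_at) return false;
    this.rows.set(key, row);
    return true;
  }

  get(token: string, timeframe: string): PriceRow | undefined {
    return this.rows.get(`${token}:${timeframe}`);
  }
}
```

In Postgres, the same rule could be expressed as `INSERT ... ON CONFLICT (token, timeframe) DO UPDATE SET ... WHERE excluded.observed_at > token_prices.observed_at`, with `token_prices` a hypothetical table carrying a unique constraint on `(token, timeframe)`.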

Because Supabase Realtime rides on logical replication, each write immediately turns into a broadcast that the browser can subscribe to with row or channel filters. This keeps the client code tiny and the UX snappy.
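On the browser side, a subscription can be sketched as follows. To keep the example self-contained it declares minimal structural types instead of importing `@supabase/supabase-js`; in an app you would pass a real client from `createClient(url, anonKey)` (possibly with a cast, since the SDK's own typings are richer). The `token_prices` table, its columns, and the channel name are hypothetical. The `in` filter asks Realtime to deliver only changes for the listed tokens, and for Postgres Changes to fire at all, the table has to be enabled for Realtime (added to the `supabase_realtime` publication).

```typescript
// Minimal structural types so this compiles without the SDK. They mirror the
// supabase-js v2 shape client.channel(name).on("postgres_changes", ...).subscribe();
// the table and column names are hypothetical.
interface PriceRow {
  token: string;
  timeframe: string;
  price: number;
  observed_at: number;
}

interface ChangePayload {
  eventType: "INSERT" | "UPDATE" | "DELETE";
  new: PriceRow;
}

interface ChangeFilter {
  event: "*" | "INSERT" | "UPDATE" | "DELETE";
  schema: string;
  table: string;
  filter?: string;
}

interface ChannelLike {
  on(
    type: "postgres_changes",
    filter: ChangeFilter,
    callback: (payload: ChangePayload) => void,
  ): ChannelLike;
  subscribe(): unknown;
}

interface ClientLike {
  channel(name: string): ChannelLike;
}

/** Subscribes to price rows for the given tokens and forwards each new row. */
function watchPrices(
  client: ClientLike,
  tokens: string[],
  onPrice: (row: PriceRow) => void,
): ChannelLike {
  const channel = client.channel("token-prices").on(
    "postgres_changes",
    {
      event: "*",
      schema: "public",
      table: "token_prices", // hypothetical table name
      filter: `token=in.(${tokens.join(",")})`, // only rows for watched tokens
    },
    (payload) => {
      // DELETE payloads carry no new row; a price ticker can ignore them.
      if (payload.eventType !== "DELETE") onPrice(payload.new);
    },
  );
  channel.subscribe();
  return channel;
}
```

A common refinement is to subscribe first and then read the current row, keeping whichever version has the newer `observed_at`, so an update that lands between the read and the subscription is not lost.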

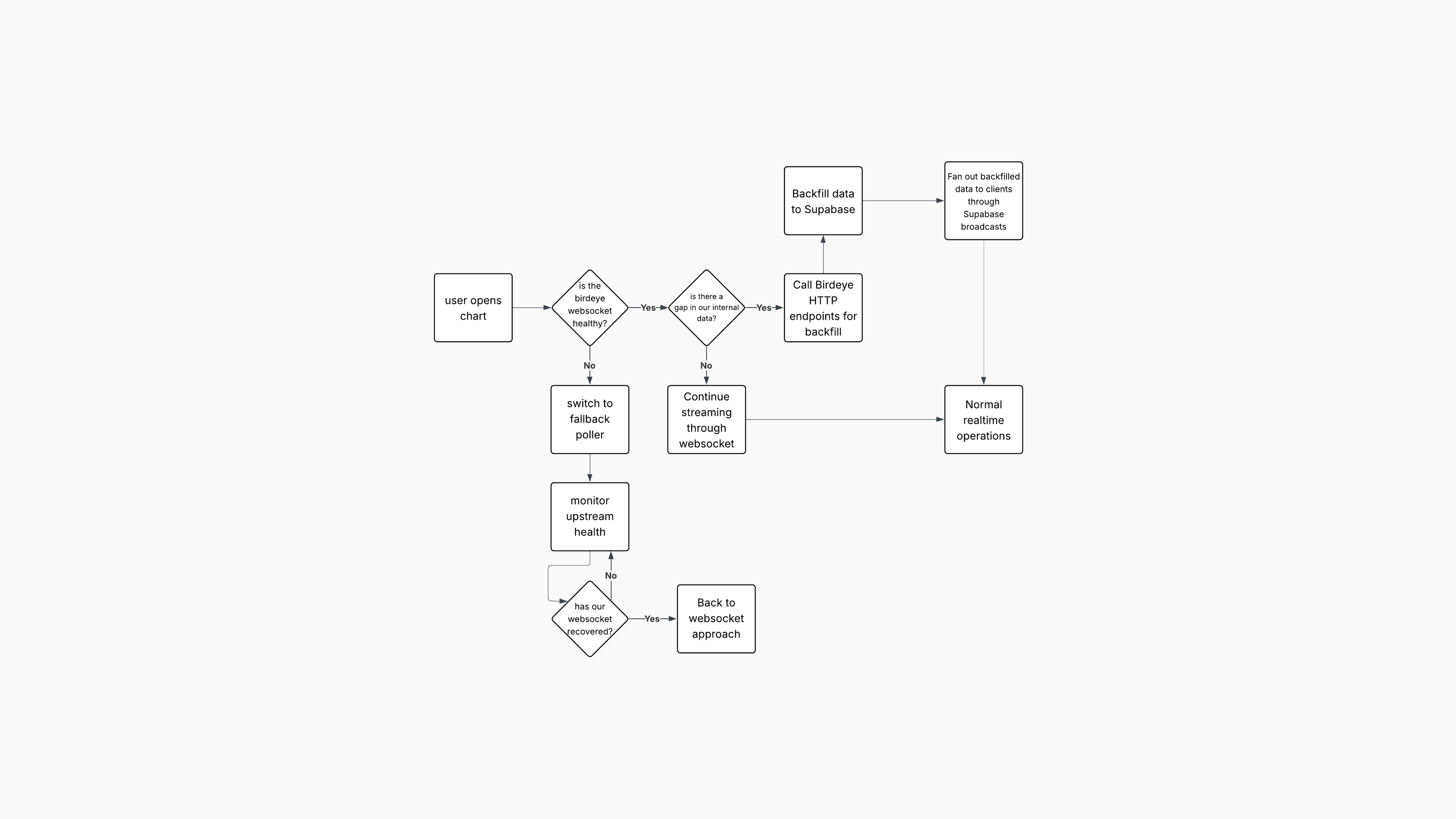

Backfill, gaps, and graceful fallbacks

WebSockets are great for the present, but every realtime system needs a plan for the moments before a client subscribes or when a network blip happens. Our backfill strategy is conservative and bandwidth-aware:

- Only when needed: on cold start or upon detecting a gap, we call Birdeye HTTP endpoints to backfill the minimal range.

- Session-aware: a session monitor decides whether to rely on WebSocket continuity or perform a short backfill.

- Write-once: even during backfill, the service writes to Supabase and lets Realtime fan it out. No direct client pushes.

- Health and fallbacks: if an upstream hiccups, we temporarily lean on a fallback poller, then return to WebSockets once healthy.
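The gap check and fallback switch can be sketched as two small pieces. Both are hypothetical illustrations of the policy described above, not Scout's session monitor: `planBackfill` compares the last stored candle with the first live one and returns only the missing range (or a short cold-start window), and `SourceHealth` switches to polling on failure and returns to WebSockets only after several consecutive healthy checks, so a flapping upstream does not bounce the service between modes.

```typescript
// Sketch only: names and thresholds are hypothetical; the Birdeye HTTP
// endpoints that would serve the backfill range are not modeled here.
interface BackfillRange {
  from: number; // first missing candle open time (ms), inclusive
  to: number; // last missing candle open time (ms), inclusive
}

/**
 * Returns the minimal candle range to fetch over HTTP, or null when the
 * WebSocket stream is continuous and no backfill is needed.
 */
function planBackfill(
  lastStoredMs: number | null,
  firstLiveMs: number,
  intervalMs: number,
  coldStartCandles: number,
): BackfillRange | null {
  if (lastStoredMs === null) {
    // Cold start: fetch a short window ending just before the first live candle.
    if (coldStartCandles <= 0) return null;
    return { from: firstLiveMs - coldStartCandles * intervalMs, to: firstLiveMs - intervalMs };
  }
  const expectedNext = lastStoredMs + intervalMs;
  if (firstLiveMs <= expectedNext) return null; // no gap: trust the stream
  return { from: expectedNext, to: firstLiveMs - intervalMs };
}

type Mode = "websocket" | "polling";

/** Falls back to polling on failure; returns to WebSockets after a healthy streak. */
class SourceHealth {
  private mode: Mode = "websocket";
  private healthyStreak = 0;
  private readonly recoverAfter: number;

  constructor(recoverAfter: number) {
    this.recoverAfter = recoverAfter;
  }

  /** Records one health probe of the WebSocket upstream; returns the mode to use. */
  report(websocketHealthy: boolean): Mode {
    if (!websocketHealthy) {
      this.mode = "polling";
      this.healthyStreak = 0;
    } else if (this.mode === "polling") {
      this.healthyStreak += 1;
      if (this.healthyStreak >= this.recoverAfter) {
        this.mode = "websocket";
        this.healthyStreak = 0;
      }
    }
    return this.mode;
  }
}
```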

Operational notes

- Connection health: we track upstream availability and auto-resubscribe with jitter to avoid thundering herds.

- Idempotent writes: upserts keyed by token/timeframe prevent duplicates; small unique constraints defend hot paths.

- Cold cache wins: new users see current state immediately from a Postgres read; Realtime then delivers subsequent updates within milliseconds.

- Server simplicity: the subscription layer is a stateless worker pool; durable state lives in Postgres.
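The post does not spell out the exact retry policy, so the following sketch assumes one: exponential backoff with "full jitter", where each wait is drawn uniformly from `[0, min(cap, base * 2^attempt))`. Randomizing the whole interval spreads reconnects out, so workers that lose the same upstream at once do not retry in lockstep. `backoffDelay` and `reconnectWithJitter` are hypothetical helpers; the `connect` callback would wrap whatever opens a Birdeye connection and resubscribes its tokens.

```typescript
/**
 * Full-jitter exponential backoff: a uniformly random wait in
 * [0, min(capMs, baseMs * 2^attempt)). The random source is injectable for tests.
 */
function backoffDelay(
  attempt: number,
  baseMs: number,
  capMs: number,
  random: () => number = Math.random,
): number {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

/** Retries `connect` until it succeeds; resolves with the number of failed attempts. */
async function reconnectWithJitter(
  connect: () => Promise<void>, // hypothetical: opens a connection and resubscribes
  opts: { baseMs: number; capMs: number; maxAttempts: number },
  sleep: (ms: number) => Promise<void> = (ms) =>
    new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<number> {
  for (let attempt = 0; attempt < opts.maxAttempts; attempt++) {
    try {
      await connect();
      return attempt;
    } catch {
      await sleep(backoffDelay(attempt, opts.baseMs, opts.capMs));
    }
  }
  throw new Error(`upstream still unavailable after ${opts.maxAttempts} attempts`);
}
```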

Why Supabase makes sense early (and where popular alternatives like ClickHouse shine)

For an early-stage team, Supabase is a sweet spot: Postgres under the hood, hosted, and fast to start. You get strong consistency, a familiar schema-first workflow, and a production-grade Realtime channel with almost no lift. The outcome is speed: fewer moving parts, less glue code, and a single operational surface.

There are mature alternatives like ClickHouse built for high-volume analytical workloads: large-scale time-series aggregation and long-range queries. If your workload leans toward heavy analytics and multi-dimensional slicing at terabyte scale, it’s a great choice. Our current hot path is dominated by fresh price writes and fan-out to clients, and Postgres + Realtime excels here with minimal complexity. As we add more historical analytics or higher-cardinality aggregates, introducing ClickHouse alongside Postgres becomes a straightforward, incremental step.

Developer experience

On the client, subscribing is a one-liner. No custom socket plumbing, no bespoke multiplexing protocols. On the server, the subscription service focuses on two jobs: deduplicate upstreams and write cleanly to the database. The result is code that is easy to debug and easy to scale.

Looking ahead

The core loop works well today. It's an early-stage stack tuned for speed and clarity, and it has served us well. From here, the plan is to keep the hot path simple while we tighten freshness and accuracy.

Over time we will move closer to the blockchain for lower latency; that is the proper way to build a truly world-class experience. There are several practical paths: read directly from Solana via read-only RPC nodes, or use managed streams like Helius LaserStream or Triton's Yellowstone Fumarole. Direct RPC access gives fine-grained control over which events we index and how fast we respond to token mints, pool creation, and DEX trades. The tradeoff is operational: reliable capacity, surge planning, and gap recovery add real work.

LaserStream is powerful. It provides a low-latency stream of Solana events with helpful capabilities like historical replay from a chosen starting slot, multi-node reliability to avoid single points of failure, and protocol flexibility via gRPC (and soon WebSockets). Fumarole takes a similar approach but adds persistent streaming with guaranteed data delivery: it tracks your position in the stream and ensures network disconnections won't cause data loss, automatically resuming from where you left off. Both services can simplify our gap handling and backfill logic while improving p95 latency for token creation detection and trade-based price updates. They fit neatly in front of our existing pipeline: we consume events, derive minimal state, and write to Postgres, and Supabase Realtime handles fan-out to clients.

The incremental roadmap is straightforward. Keep Birdeye as the aggregate feed that powers the hot path today while we introduce a parallel on-chain source for faster new token discovery and OHLCV data. Unify both sources behind the same write-once discipline to Supabase, measure end-to-end latency and accuracy, and promote the best-performing path as the default. Clients do not change. Our subscription deduplication and Realtime broadcast model carry forward unchanged.
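The "unify both sources" step can be sketched as a single writer that accepts updates from either feed. Everything here is hypothetical (`DualSourceWriter`, the `birdeye`/`onchain` labels, the field names): an update is written only if it is fresher than what is already stored for that token, and every update, winning or not, contributes a latency sample to its source. Latency here means upstream observation to receipt by the service, a proxy for freshness; full end-to-end latency would also need client-side timestamps.

```typescript
// Sketch only: source labels, field names, and the class are hypothetical.
type Source = "birdeye" | "onchain";

interface SourcedUpdate {
  source: Source;
  token: string;
  price: number;
  observedAt: number; // when the upstream observed the price (ms)
  receivedAt: number; // when our service received the update (ms)
}

class DualSourceWriter {
  private newestByToken = new Map<string, number>(); // token -> newest observedAt written
  private latencyBySource = new Map<Source, number[]>(); // unbounded for simplicity
  private readonly write: (update: SourcedUpdate) => void;

  constructor(write: (update: SourcedUpdate) => void) {
    this.write = write; // e.g. the existing upsert into Postgres
  }

  ingest(update: SourcedUpdate): void {
    // Record latency for every update, even ones that lose the freshness race.
    const samples = this.latencyBySource.get(update.source) ?? [];
    samples.push(update.receivedAt - update.observedAt);
    this.latencyBySource.set(update.source, samples);

    const newest = this.newestByToken.get(update.token);
    if (newest !== undefined && newest >= update.observedAt) return; // nothing fresher
    this.newestByToken.set(update.token, update.observedAt);
    this.write(update); // same write-once path regardless of source
  }

  /** Nearest-rank percentile (p in 1..100) of latency samples for one source. */
  percentile(source: Source, p: number): number | undefined {
    const samples = this.latencyBySource.get(source);
    if (samples === undefined || samples.length === 0) return undefined;
    const sorted = [...samples].sort((a, b) => a - b);
    const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
    return sorted[Math.max(rank, 1) - 1];
  }
}
```

Comparing `percentile("onchain", 95)` with `percentile("birdeye", 95)` over the same period is one way to decide which path to promote; a production version would keep a bounded, time-windowed sample set instead of an ever-growing array.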

K.I.S.S.

Simple systems scale. By keeping the hot path tiny and relying on Postgres and Supabase Realtime for fan-out, we deliver realtime prices reliably while keeping our architecture easy to operate.